Hey there, stranger!

I’m Jay Versluis, and I do a whole bunch of stuff on the internet. This site is an attempt to bring my projects closer together, and to make a note of things I don’t want to forget. You know, tips about 3D apps, creative software, video editing, inspiration, and some personal ramblings too.

I also make videos about software on a variety of subjects, from quick tips to full courses. Take a look at the top menu for an overview.

I like web development, video games and a bit of coding too, but I have a whole other website for that.

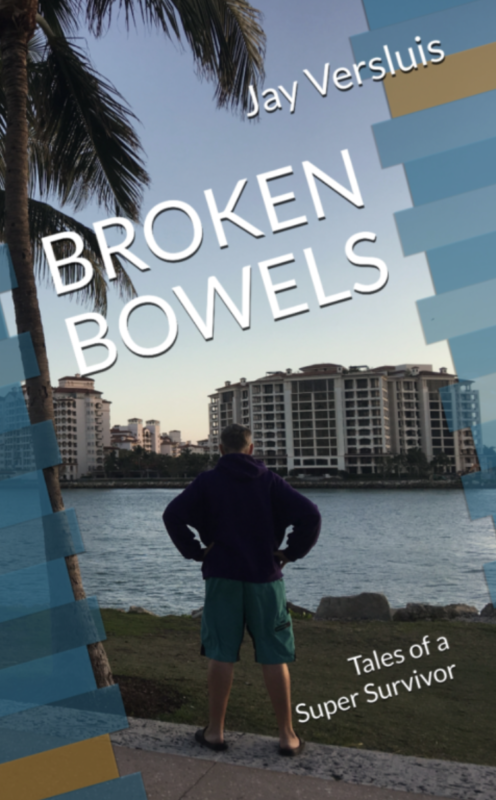

I’m a Super Survivor

In 2016 I nearly died from a complicated case of colon cancer. I’ve spent several years going through treatment hell and have survived against all odds. Thanks to a crack team of doctors and a new treatment called immunotherapy, I made a truly miraculous recovery.

My doctors call me a Super Survivor. In fact, my case and my story were so impressive that I’ve written this book about it. I have a separate website about this topic, complete with post-treatment diary.

If you’re interested in that part of my life, check out the quick facts here, or visit my other website: supersurvivor.tv.

Latest Video

Here's my latest YouTube video, brought to you by some magic I found here.

You can find many other videos either on my dedicated Watch Page, or from the drop-down menu at the top. They're grouped into playlists to aid discoverability.

If you're a supporter, you get to watch most videos without ads! You'll need to register here, and if I can link your name or email to a recent donation, you'll be able to log in on this site and all those pesky ads shall disappear.

Take a look at how to support me here.

Latest Articles

This is what I've been writing about lately. You can read more articles either on my Blog Feed, or take a look at the top menu for a full table of contents. There's a list of posts filtered by topic, or if you're looking for anything in particular, use the search function in the menu.

- Make one morph follow another from a different mesh in iClone

- Building a Fetch Quest with Story Framework

- How to blend between the Player Camera and a Custom Camera in Unreal Engine

- Creating custom Pop-up Notifications in Story Framework

- Implementing Story Choices in Story Framework

- Creating Interior Dialogues with Subtitles in Story Framework

What else do I do

I'm a broadcast professional by trade, with a background in film and video engineering. I've had various jobs in film and live television for 20 years, ranging from telecine colourist to editor, tape jockey and satellite feed coordinator. I'm essentially "the guy who makes things happen" behind the scenes.

Reputable clients include the BBC, MTV, IMG, CNN, Cartoon Network, Reuters, NHK and many other companies that no longer exist.

Fun fact: Although I'm not at all into football (soccer), I helped bring the English Premier League to over 2bn viewers worldwide every weekend for more than a decade.

I'm also a loving husband of 21 years and counting! Julia and I met through a BBC job at the dawn of the millennium in a club-cum-TV-studio at the Swiss Centre in London. We got married in Las Vegas in 2004 and haven't looked back. Julia has been with me every step of the way through my cancer journey. I couldn't have done it all without her love and support.

These days we both work from home, spending quality time while learning new 3D techniques, sharing knowledge about software and enjoying every minute life has to offer. I'm glad I can take you all with me on this journey, make you smile or perhaps inspire you along the way.

Let's stay in touch!

I do a lot of things across a variety of sites and services on what's slowly becoming The Metaverse. If you'd like to keep an eye on all these things in one place, take a look at my Twitter feed. I post new videos and articles there.

You can also subscribe to YouTube and Twitch notifications (that overused bell icon), or sign up to receive new articles from this site via email if you like.

I also offer some behind-the-scenes posts, early access videos and other things on Ko-fi and Patreon. If you want to say thanks or buy me a coffee, those places are perfect! You can also support my work in various other ways if you like, including shopping via affiliate links.

I no longer maintain a public email or contact form. The spam just got too much. If you want to get in touch, DM me on any of the social networks or leave a comment on an article (preferably on topic).

Thanks for stopping by 💖🙏